-

CPU Cache and Side-Channel Attacks: A Silent Threat in Modern Computing

1. Introduction: When Speed Becomes a Double-Edged Sword

The CPU cache (L1, L2, and L3) is designed to make computing faster. It keeps frequently used data close to the processor, drastically reducing memory latency and improving performance. But this performance boost comes with a critical trade-off: it opens the door to side-channel attacks. These attacks don’t…

-

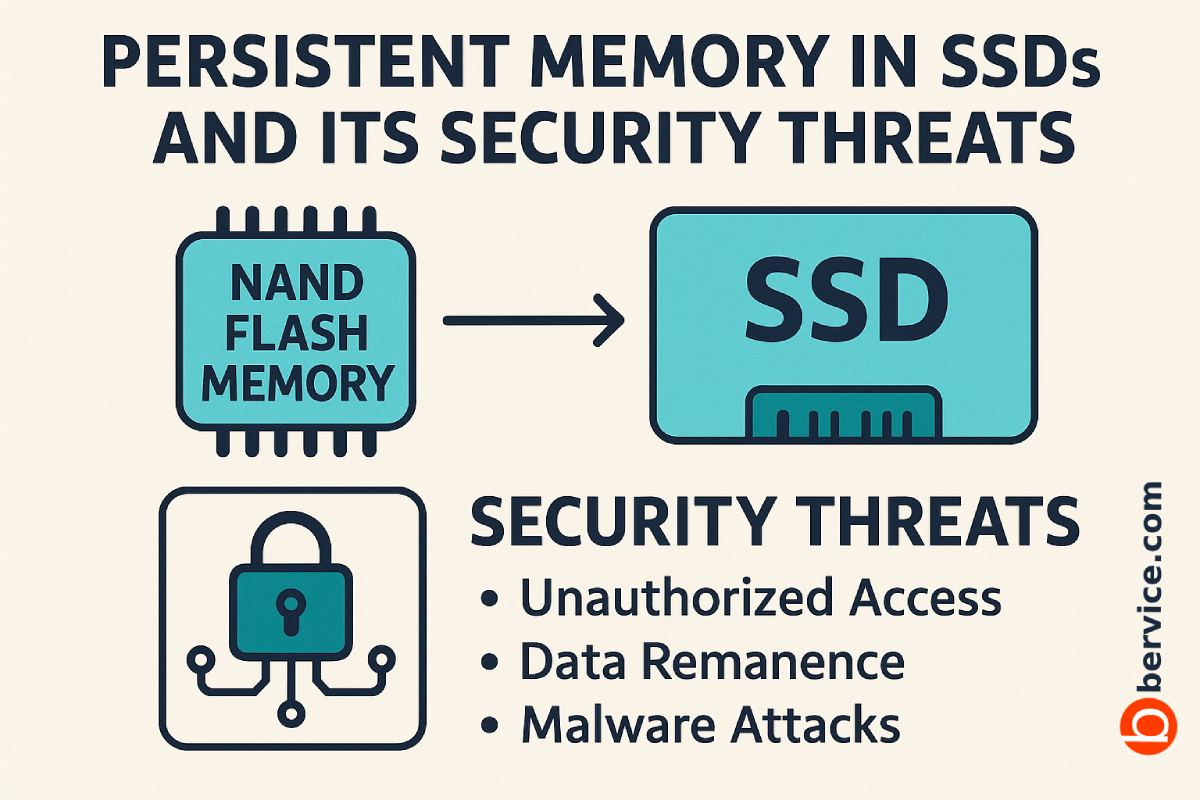

Persistent Memory in SSDs and Its Security Threats

1. Introduction: The Rise of Persistent Memory

In recent years, persistent memory technologies have blurred the line between traditional storage and volatile memory. Unlike conventional DRAM, persistent memory retains data even after power is removed, combining low latency, high throughput, and non-volatility. Modern solid-state drives (SSDs) increasingly integrate persistent buffers and caches to improve…

-

AI Privacy and Security: Building a Safer Future

The rapid rise of AI and autonomous AI agents is forcing humanity to face one uncomfortable fact: granting these systems access to personal data is not just a convenience; it is a major security gamble. Every “yes” you give to an AI service effectively opens another door to your digital identity. Let’s be…

-

The Future of AI: Massive Models or a Swarm of Small Intelligent Agents?

Over the past decade, artificial intelligence has experienced a seismic shift from narrow, task-specific systems to powerful general-purpose models. The rapid rise of large language models (LLMs) like GPT-4 and Claude 3 has convinced many that the future of AI belongs to a handful of massive, centralized models. But this vision is being challenged…

-

MCP Servers in AI: The Backbone of Intelligent Agent Orchestration

1. What MCP Servers Are

MCP stands for “Model Context Protocol.” MCP servers act as bridges between AI models and real-world tools or data sources. Instead of forcing an AI model to “know” or “store” everything internally, MCP provides a standardized protocol that lets the model call external systems in a structured way. Think…